I really hope Microsoft employees (from the Visual Studio team) or the management read this post.

We are all talking about it internally, but nobody says it out loud. I don’t like pretending to speak for everyone else, so I’ll say it myself.

Here’s a shocker: the latest revisions of Visual Studio are failing to satisfy the video game industry, which, last time I checked, plays an important role in the Microsoft ecosystem.

The trigger was these two tweets; the more I read them, the more I thought “YES, I FEEL THE SAME!!!”. In fact, Casey Muratori seems to think that as soon as Clang becomes usable on Win32, it’s time to move, which is exactly what I’m also considering doing.

Let’s set aside the horrible and controversial VS2012 UI redesign (fortunately, VS2013 brings colours back) and the ALL CAPS menu thingy. Unfortunately, those involve a lot of subjectivity once one starts arguing. Let’s keep our talk to the objective failures of Visual Studio.

Let me also make something clear: I will only focus on C++. And since VS 2013 is still very new, I will mostly talk about my experience with VS 2012. At a glance, 2013 improves things a little, but not much.

I mainly work with graphics software and low-level stuff. I couldn’t care less about their jQuery integration, JavaScript debugging, or even their C# IDE. From the look of it, you’re doing a wonderful job there, since comments seem to be positive. Maybe you really are improving on those platforms, or maybe those are just fanboys. I don’t know. What I do know is C++. And that area is underachieving.

Now that I’ve cleared that up, here’s a summary of the problems in VS, which I’ll go through in detail one by one:

- Horrible compilation performance

- Excruciatingly slow IntelliSense

- Unusually high RAM consumption

- No native 64-bit version

- High-latency input (the UI becomes unresponsive when I’m typing very fast!)

Horrible Compilation Performance

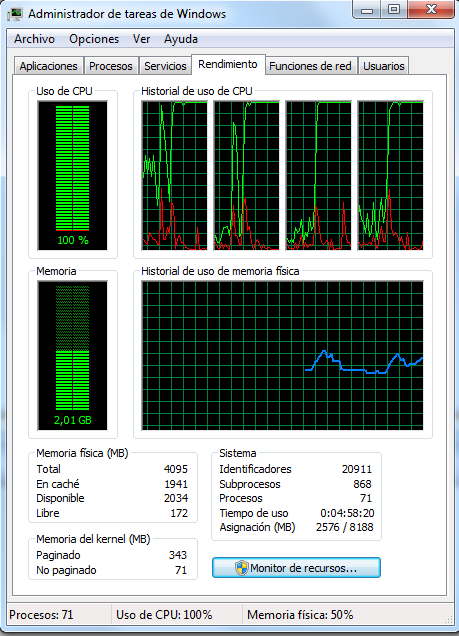

For medium-sized to large projects, this is a real PITA. The following timings are for compiling Ogre 2.0 (OgreMain only). I forced the MSVC 2008 IDE (yes, it can be done) on both to maximize available RAM and thus avoid HDD bottlenecks, since MSVC 2012 and its new build tools consume a ridiculous amount of memory.

By the way, the project already uses precompiled headers, since the usual advice I see on the web is to turn them on.

Intel Core 2 Extreme QX9650 (quad core) @ 3 GHz, 4GB RAM. OgreMain only. Flags: /O2, /Ob, /arch:SSE2, /MP (no LTCG).

- Visual C++ 2008: 3 mins 1 second.

- Visual Studio 2012: 7 mins 29 seconds.

- Visual Studio 2013: 5 mins 58 seconds.

- GCC: 2 mins 11 seconds. (not using precompiled headers, Linux)

- Clang: 1 min 31 seconds. (not using precompiled headers, Linux)

So compile time more than doubled between 2008 and 2012, and almost exactly doubled between 2008 and 2013. That is a major productivity hit, not to mention it gets on my nerves every time I hit F7. I’m not being entirely fair here, since VS 2012 does produce better code than 2008; however, GCC and Clang produce code of comparable quality, yet take considerably less time.
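As a quick sanity check on those ratios, here they are computed from the timings listed above (3 min 1 s = 181 s, 7 min 29 s = 449 s, 5 min 58 s = 358 s, Clang’s 1 min 31 s = 91 s). Clang’s figure was measured on Linux without precompiled headers, so the last ratio is only indicative:

$$
\frac{T_{2012}}{T_{2008}} = \frac{449\,\mathrm{s}}{181\,\mathrm{s}} \approx 2.48,
\qquad
\frac{T_{2013}}{T_{2008}} = \frac{358\,\mathrm{s}}{181\,\mathrm{s}} \approx 1.98,
\qquad
\frac{T_{2012}}{T_{\mathrm{Clang}}} = \frac{449\,\mathrm{s}}{91\,\mathrm{s}} \approx 4.93
$$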

The “buy a faster PC” argument is pointless. Clang, GCC and VC 2008 will always be faster in comparison. Not to mention that VS2012 uses more RAM, which means it can’t scale as well as the others (it fights Moore’s Law).

The good news from the Ogre team: we support unity builds, which bring every compiler down to roughly one minute (VC 2008 is the fastest at 49 seconds and VS 2012 the slowest at 1 min 29 s, while GCC and Clang are nearly tied in the middle at 1 min 12 s and 1 min 20 s respectively).

But unity builds are a suboptimal solution: they suck when one is actively working on the code, because recompiling one .cpp file means recompiling many.
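For readers who haven’t used them: a unity build compiles one generated .cpp file that #includes a batch of the project’s real .cpp files, so shared headers are parsed once per batch instead of once per file. The sketch below is a hypothetical helper (not Ogre’s actual build script, and the file names are made up) that just generates the text of such a file:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Builds the contents of a unity translation unit: one #include line per
// real source file. The compiler then sees a single big .cpp, so common
// headers are parsed once for the whole batch.
// NOTE: hypothetical helper for illustration only.
std::string makeUnityFile(const std::vector<std::string>& sources)
{
    std::string out = "// Generated unity build file. Do not edit.\n";
    for (const std::string& src : sources)
        out += "#include \"" + src + "\"\n";
    return out;
}
```

Compiling the generated file instead of the individual sources is the whole trick. It also shows the trade-off described above: with `makeUnityFile({"OgreNode.cpp", "OgreMath.cpp"})`, touching `OgreMath.cpp` recompiles `OgreNode.cpp` too.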

Excruciatingly slow IntelliSense

In the C++ world, “Go to Definition” is a powerful tool. Autocomplete and highlighting are too, but a C++ programmer would trade both of them for Go to Definition almost any day. It is most useful when examining other people’s code and when refactoring, since it lets us quickly navigate through the source files following the same flow as the code.

Something I really liked about VS 2008 is that it would wait a while before updating its IntelliSense database, working with outdated information in the meantime. Why would anyone want such horrible behaviour, you say? Working with outdated information? Heresy!? Not really. When I’m refactoring and have to change a function (be it its name or its arguments), I need to change both the function definition and its forward declaration.

In 2008, I change the definition, hit Ctrl+Alt+F12, and change the forward declaration; then I can go back and forth with Ctrl+Tab (or vice versa, i.e. change the declaration first).

In 2012, I change either one and hit Ctrl+Alt+F12; then I wait, staring at the “Please wait while IntelliSense and browsing information are updated…” dialog, only to find that I didn’t go anywhere, because there’s no forward declaration matching the modified definition. Now I have to look for the file myself (either through Find in Files, with God only knows how many hits, or by hunting for the right file), and by the time I reach the forward declaration, I’ve totally forgotten the code I had in mind. This is really frustrating.
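Part of why a tool can’t simply “follow” the edit is a language rule: in C++, changing the parameter list in the definition doesn’t update the old declaration, it declares a brand-new overload. A minimal illustration, with a hypothetical function name:

```cpp
#include <cassert>

// Header (forward declaration), still carrying the OLD signature.
int scaleValue(int value);

// .cpp file: the definition was refactored to take a second argument.
// This does not conflict with the declaration above; it is simply a
// different overload, so nothing "matches" the old declaration anymore.
int scaleValue(int value, int factor)
{
    return value * factor;
}
```

Code calling `scaleValue(5)` would still compile against the stale declaration and only fail at link time with an unresolved symbol. That is why keeping both in sync by hand is tedious, and why Qt Creator flagging the mismatch (see below) is so handy.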

The next problem with IntelliSense is this f***ing dialog:

I would love to see the VS dev team use their own tools on real projects at some point, not just on a few Hello World programs.

IntelliSense’s “Go to Definition” is too slow. It often takes a noticeable amount of time (probably between 750 ms and 2 seconds), while VC 2008 was nearly instantaneous (except in a few cases).

As with compilation times, I’m not being entirely fair. VS 2012’s IntelliSense is much more accurate, while VC 2008’s has always been criticized for being inaccurate or unable to parse complex C++ syntax (or, in simple words, “just broken”).

But the truth is 2012’s is so slow and sluggish that I’d prefer 2008’s inaccuracy and speed over 2012’s accuracy and slowness.

A quick look at VS2013 indicates that it has gotten faster at read-only queries (I can still see the annoying dialog after writing a bit of code) and, most importantly, it does take me to the forward declaration after I modify the definition (but not the other way around). It’s something, I guess. Credit where credit is due.

And yet again, Visual Studio fails when compared to the competition: Qt Creator’s Go to Definition is as fast as VC 2008’s and as accurate as VS 2012’s. It also solves the refactoring problem by drawing a light bulb on the function’s line when the definition and declaration don’t match, so you can make them match automatically. Worse performance and fewer features than a competitor.

It’s a double fail for the Visual Studio team. Ouch.

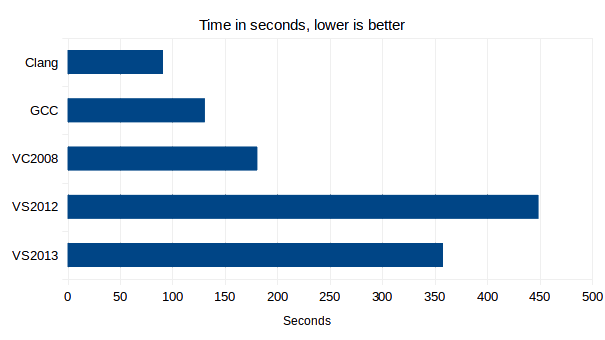

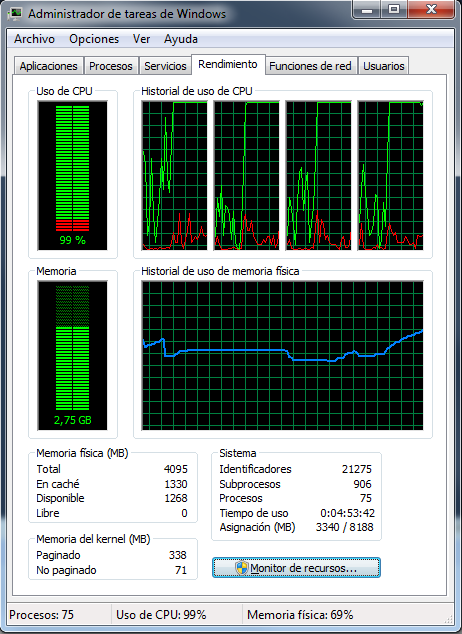

Unusually high RAM consumption

The IDE uses 3 times more RAM than Visual C++ 2008, and its compiler uses 2 to 3 times as much. Running multiple instances of Visual C++ is actually quite common: normally 2, but sometimes up to 4. Why so many? Sometimes because it’s required, sometimes because the projects are not entirely related, sometimes due to modularity, and sometimes due to the lack of 64-bit versions (see the next problem). So whereas that setup runs ultra-smooth and responsive with VC 2008 on just 4GB, VC 2012/2013 requires at least 12GB (16GB to get a good experience).

We’re talking about the same projects in a different IDE here. No excuses.

RAM consumption with two instances of VC 2008 open on two different projects, one of them compiling OgreMain

No native 64-bit version

How is this a problem? Running out of memory (the infamous 2GB mark for 32-bit user-space applications) is much more common in VS 2012/2013 than in VC 2008. Projects that ran just fine now crash the IDE after the solution upgrade.
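The reason more physical RAM doesn’t help is that the ceiling comes from the 32-bit address space, not from the machine. Below is a simplified model of how much user-mode virtual address space a 32-bit Windows process gets; it deliberately ignores the /3GB boot option on 32-bit Windows and other special cases, and the function name is hypothetical:

```cpp
#include <cassert>
#include <cstdint>

// Simplified model of the user-mode virtual address space available to a
// 32-bit Windows process. Assumptions: no /3GB (increaseuserva) boot option
// on 32-bit Windows. The values are address-space ceilings, not RAM sizes.
std::uint64_t userAddressSpace32(bool largeAddressAware, bool on64BitWindows)
{
    const std::uint64_t GiB = 1ull << 30;
    if (largeAddressAware && on64BitWindows)
        return 4 * GiB;  // /LARGEADDRESSAWARE process under WOW64: full 4 GiB
    return 2 * GiB;      // default: the "infamous 2GB mark"
}
```

Either way, going from 8GB to 32GB of RAM changes nothing for a 32-bit process; only a native 64-bit build lifts the ceiling. The same model explains the comment further down about a 32-bit linker paging while 5 GB of RAM was still free.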

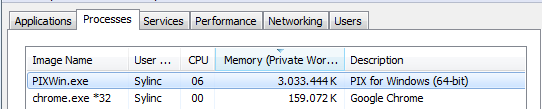

Still not convinced? Let’s talk about PIX: VS 2012 is now the replacement for PIX. But for anything other than small demos and small projects, running out of memory is very, very easy. I’ve hit 3GB of usage with PIX. Very often it’s because I was leaking something big. But heck, I fired up PIX because I wanted to find the cause of a major problem, not to be nice to it!

But hey! At least there’s a hacked version of PIX that works with D3D11.

High latency input

The situation has improved since Visual Studio 2010, which was infuriatingly slow; however, I still occasionally type and watch the keystrokes appear only a moment later. I can also hear the HDD cranking up like crazy when that happens. I upgraded to 8GB while writing this post, and the problem persists, even though Task Manager shows plenty of available RAM for caching files. So, Visual Studio… what the hell are you doing!?

If it’s so bad, why don’t you move?

Oh, I AM trying to move. And I cannot wait for Clang’s MSVC frontend to be finished. That’s the whole point of this article: if the VS team doesn’t fix these serious pitfalls, in the long run more and more developers will walk away.

But there are three things to keep in mind:

- Visual Studio IS the default and standard compiler for the Windows platform.

- MSVC 2008 is probably one of the best IDEs ever made (including the compiler). I still use it on a daily basis. However, as time goes on, fewer libraries ship precompiled for 2008, and MS doesn’t support it for its latest platforms (e.g. Win 8 and co.).

- Truth be told, MSVC has one of the best debuggers out there, if not the best. It’s also true that MSVC’s debugging performance has gotten worse (evaluating expressions and single-stepping take longer and longer with every release), so watch out for that too. But it is still its strongest selling point. If Qt Creator or another IDE had MSVC’s powerful debugging UI without all the performance pitfalls, VS’s days would be numbered.

Overall, VS 2013 is a big step forward from the horrible VS2012 and VS2010, so there’s still hope; but they still have a long way to go to recover the competitiveness they once had. Mainly in three areas: compiler performance, which is still horrible while the game industry demands fast iteration and very low compile times; a 64-bit version; and better refactoring facilities (how smartly IntelliSense adapts to code changes, even if that means working with older data).

You can use the 2013 IDE with the 2012 compiler, which does solve a few issues: IntelliSense is much faster, switching between Debug and Release doesn’t take ages, and so on, and you get the pretty damn useful “Peek Definition”, which (surprise!) actually peeks at the definition (in 2012, Go to Definition and Go to Declaration are the same, as both just jump to the declaration). However, there are still funny problems. For instance, there’s a command to toggle between header and source file. If you are in the source file and toggle it, voilà, you’re in the header; if you press it again, it says it can’t find the corresponding source file, which kind of defeats the purpose.

However, this is actually not the main problem, and whether VS is 64-bit doesn’t bother me either. What bothers me are incorrect/long builds, bad code generation and lags during typing. VS delivers on all fronts here. I regularly run into issues where “Build all” forgets to update a precompiled header/dependency, leaving me with a broken binary. Long builds are really long with Visual Studio as well, as it seems to force flushes to disk far more often than GCC/Clang (or it just has ugly access patterns). (BTW: you can speed it up a bit by using the 64-bit compiler; with CMake, you just need to reconfigure and select the 64-bit compiler manually.)

Bad code generation: the code generated by Visual Studio is typically slower than what GCC and Clang create. Especially in math-intensive parts where lots of small objects are created on the stack, GCC and Clang easily win by 30 to 100%. This case is probably not that interesting when developing a large application like “Word”, but in algorithm development it is not uncommon to have a small compute kernel where better code generation gives a huge advantage.

Finally, lags during typing: no matter how much IntelliSense is going on, no matter what the IDE is trying to do, it should *never* hang during typing. I routinely Alt+Tab into Sublime to type text while “Visual Studio is busy” or while Visual Studio is building. Frankly, if I have to type in Sublime Text, build on the command line, and only use Visual Studio for debugging, then the product is heading in the wrong direction.

But I totally agree on the debugger; it’s the gold standard for debuggers with a UI. The IDE itself is also quite nice (good use of screen space, I like the colored border when debugging/developing, navigation with tabs is fast, at least faster than with the list in Qt Creator, etc.). With 2013, IntelliSense is also really good (it hides private members when using a class, for instance, yay!), and I have high hopes that the 2013 compiler will produce better code and give better compile times as well. Unfortunately, as I depend on Boost and Qt, I always lag a bit behind until they support VS 2013. I think they saw the signs, but it’ll take a while to turn a ship like Visual Studio around.
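For a concrete picture of the kind of code meant here, below is a hypothetical kernel built from small value types returned by value: every operator creates a temporary, and how well the optimizer keeps those temporaries in registers is where compilers can differ. It only illustrates the pattern; the 30 to 100% figure is the commenter’s measurement, not something this snippet demonstrates.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Small value type: every operator returns a new Vec3 temporary.
struct Vec3 {
    float x, y, z;
};

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Tiny compute kernel: p[i] += v[i] * dt, while accumulating sum(v[i].v[i]).
// A good optimizer keeps all temporaries in registers; weaker code
// generation may spill them to the stack on every iteration.
float integrate(std::vector<Vec3>& p, const std::vector<Vec3>& v, float dt)
{
    float energy = 0.0f;
    for (std::size_t i = 0; i < p.size() && i < v.size(); ++i) {
        p[i] = p[i] + v[i] * dt;
        energy += dot(v[i], v[i]);
    }
    return energy;
}
```

Comparing the generated assembly of `integrate` across compilers is a quick way to check the claim on one’s own code.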

> I regularly run into issues where “Build all” forgets to update a precompiled header/dependency leaving me with a broken binary

We hit this problem semi-regularly. It’s a bug in the updating of the .tlog files that hold dependencies. I wrote a script that scans for bogus .tlog files and blows them away when detected. We run this on all of our build machines. I discussed this with Microsoft a lot and I’m hopeful that it’s fixed for VS 2013. We’ll see.

I agree the combo-list-based navigation in Qt Creator is inefficient, though fortunately there’s also an extra window that shows them as a list. Not as efficient as tab-based navigation, but it’s good enough for me.

Totally agree on the lag side. VS lags too often, and it should *never* lag.

I didn’t know about the 64-bit compiler. I should totally try it. Thanks for the tip!

Incorrect/broken builds when using precompiled headers have been around as long as I can remember (and, very occasionally, even without precompiled headers). However, I didn’t pay much attention to them on VS2008, when rebuilding the whole project didn’t take much time (except for the occasional swearing when the broken binary runs OK and then suddenly crashes, until you realize it just needed a rebuild).

But now that compilation has gotten slower, rebuilding the whole solution is a PITA.

On VS 2010 the slow configuration switching is caused by thousands of reads from the .sdf database that holds IntelliSense information. This is a SQL Server Compact database, and VS ends up doing literally thousands of 4 KB reads. Xperf shows that nicely. Random access is slow: I’ve seen configuration switches take over a minute. I force my .sdf files onto my SSD, and that mostly solves the problem. They’ve improved the situation for VS 2013.
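A back-of-the-envelope estimate shows why random 4 KB reads hurt so much on a spinning disk and why moving the .sdf to an SSD mostly fixes it. The access times and throughputs are assumed ballpark figures (about 10 ms per random access and 100 MB/s on an HDD, about 0.1 ms and 500 MB/s on an SSD), and $N = 6000$ is just one illustrative reading of “thousands of reads”:

$$
T \approx N\left(t_{\text{access}} + \frac{s}{B}\right)
$$

$$
T_{\text{HDD}} \approx 6000\left(10\,\text{ms} + \frac{4096\,\text{B}}{100\,\text{MB/s}}\right) \approx 6000 \times 10.04\,\text{ms} \approx 60\,\text{s}
$$

$$
T_{\text{SSD}} \approx 6000\left(0.1\,\text{ms} + \frac{4096\,\text{B}}{500\,\text{MB/s}}\right) \approx 6000 \times 0.108\,\text{ms} \approx 0.65\,\text{s}
$$

The access term dominates: the data itself is only about 24.6 MB, which would take roughly 0.25 s to read sequentially even from the HDD.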

It’s the same problem that Windows Live Photo Gallery has:

http://randomascii.wordpress.com/2012/08/19/fixing-another-photo-gallery-performance-bug/

I highly recommend trying NetBeans. Better source control integration, better code navigation, better project management. (And it’s cross-platform. And free. And has more language plugins.)

Perhaps once they realise that their audience is less and less captive, that will be reflected in their product; but for now, C++ in VS has always looked more like a “marketing” Trojan horse:

– Better support for their own tech: C# support is really wayyyy better than C++, it’s very impressive.

– Forced installation/documentation of their tech (it used to be XAML/MFC, and now in 2013 the “advertising SDK for 8.1” and “sign-in”)

– The never-ending solution/project incompatibilities between versions (no backward compatibility, and even upgrading forward is cumbersome), for their own reasons.

– C++ support is very low quality, with the answer more often than not being “better if you do it our way” (precompiled headers, the “capitalized” thing…)

The only thing that has improved lately is C++ standard support (but it took a decade…).

Our programmers’ biggest problem when it comes to iteration times is the relinking of DLLs that shouldn’t be relinked… sometimes it relinks and sometimes not. When it does relink, it can take up to four or five minutes if you’ve made the change in a DLL that many others depend on.

You can work around this, in a way, by relinking just the DLL you’ve changed, but as soon as you’ve changed code in a leaf DLL you might end up relinking tons of DLLs again.

So changing a constant in a .cpp in “engine.core” might take 3 seconds to compile and 5 minutes to link, where linking should take 10 seconds at most.

(We have quite a lot of DLLs in our non-retail Win64 builds.)

We are also using “bulk” files, together with a plugin that can extract single files from the bulk files to improve iteration times (so the files you are working on are outside the bulk). These files can then be re-added to the bulk when the work is done. This kind of gives the best of both worlds (as things stand today).
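The worst case described here is easy to model: if a change forces a DLL’s import library to change, every DLL that depends on it, directly or transitively, may need relinking. Below is a minimal sketch of that computation over a dependency graph. The DLL names are hypothetical, and whether a given dependent is actually relinked depends on whether the import library really changed, which this model ignores (hence “sometimes it relinks and sometimes not”).

```cpp
#include <cassert>
#include <map>
#include <queue>
#include <set>
#include <string>
#include <vector>

// dependsOn["game.dll"] = {"engine.core.dll", ...}: what each DLL links against.
using DepGraph = std::map<std::string, std::vector<std::string>>;

// Worst case: returns every DLL that directly or transitively depends on
// `changed`, i.e. everything that might be relinked after touching it.
std::set<std::string> relinkSet(const DepGraph& dependsOn, const std::string& changed)
{
    // Invert the edges: for each DLL, who links against it?
    std::map<std::string, std::vector<std::string>> dependents;
    for (const auto& [dll, deps] : dependsOn)
        for (const auto& dep : deps)
            dependents[dep].push_back(dll);

    // Breadth-first walk over the reversed graph.
    std::set<std::string> result;
    std::queue<std::string> todo;
    todo.push(changed);
    while (!todo.empty()) {
        std::string cur = todo.front();
        todo.pop();
        auto it = dependents.find(cur);
        if (it == dependents.end())
            continue;
        for (const auto& d : it->second)
            if (result.insert(d).second)  // first time we reach this DLL
                todo.push(d);
    }
    return result;
}
```

Every entry in the returned set is a full link, which is how a 3-second compile turns into minutes of linking when the changed DLL sits near the bottom of the graph.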
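The “bulk files plus extraction” workflow can be sketched as a partitioning step: files someone is actively editing are compiled standalone, and everything else is grouped into bulk files of a fixed size. The helper, its parameters and the group size are hypothetical, not the plugin mentioned above.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

struct BuildPlan {
    std::vector<std::vector<std::string>> bulkGroups;  // each becomes one bulk .cpp
    std::vector<std::string> standalone;               // compiled on their own
};

// Splits `sources` into bulk groups of at most `groupSize` files (a value of
// 0 puts everything in a single group), except for the files in `workingSet`,
// which are pulled out so that editing them only recompiles that one file.
BuildPlan planBulkBuild(const std::vector<std::string>& sources,
                        const std::set<std::string>& workingSet,
                        std::size_t groupSize)
{
    BuildPlan plan;
    std::vector<std::string> pending;
    for (const auto& src : sources) {
        if (workingSet.count(src)) {
            plan.standalone.push_back(src);
            continue;
        }
        pending.push_back(src);
        if (pending.size() == groupSize) {
            plan.bulkGroups.push_back(pending);
            pending.clear();
        }
    }
    if (!pending.empty())
        plan.bulkGroups.push_back(pending);
    return plan;
}
```

Moving a file back into the bulk when the work is done is just removing it from `workingSet` and regenerating the plan.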

We recently made the move from VS2008 to VS2012 at the studio where I work. It’s been pretty smooth and I haven’t had too many complaints, though the debugger pauses you mentioned are something I noticed right away (sometimes 5 to 10 seconds to press F10 and skip to the next line, though it usually goes away if I restart VS).

Linking seems to eat up a ton of RAM. I noticed on one of our build machines that the linker stalled for long periods (near-zero CPU usage for 1 to 2 minutes at a time) due to paging. The funny thing was that there were still 5 GB of free RAM, but I guess since we build with the 32-bit compiler/linker, it was unable to use as much RAM as it needed. I’m not sure whether this issue has gotten worse under VS2012, but it’s not something I ever noticed being a problem in VS2008.

A few other minor things that irk me with VS2012:

– Hitting Ctrl-Shift-F, which is supposedly the hotkey for “Find in Files”, often pops up the Find dialog with “search this file only” selected instead of “entire solution”. Under VS2008 I never had this problem. It’s very frustrating, because I usually don’t notice that it has changed the setting on me, and I just sit there for a while thinking “there must be more matches”.

– They got rid of macros. I had quite a few useful macros for automating stuff that should be built into VS anyhow (e.g. switching between .cpp and .h files, or automatically adding the #include for the currently highlighted symbol). It was always a bit of a pain in VS2008 that you had to write these in Visual Basic. The alternative in VS2012 is to write an add-in in C#. I prefer C#, but I’ve found creating an add-in to be kind of finicky (having to rebuild and reinstall the add-in each time I want to change or add something is too heavyweight for my own personal hacks).

On the plus side, we are pretty heavy on C# tools with lots of DLLs, which everyone builds on their machine as part of our build process, and there seem to have been some improvements to C# building in VS2012 that have sped that process up quite a bit.

When you’re stuck writing code for console systems that only support certain versions of VS, it’s not unusual to need a copy or two of VS2008, at *least* one 2010 and two or three 2012 instances open simultaneously. Thankfully I have a hex-core 3.2 GHz machine with 32GB of RAM; otherwise I’d be knackered.

Since MSoft demands that you use the latest VS with their latest console for development, compile times are ridiculously slow unless you fork out extra money to the Incredibuild guys for a per-seat license to distribute builds.

Jesus, I remember writing an entire game in <32KB for a 3MHz CPU, when cross-compiling on the PC took seconds, not minutes or tens of minutes!

Brief (a great editor) was a DOS program that used less memory than the application I was writing. I guess these days, when you code for the PC, instead of streamlining and optimizing you just throw more (virtual) memory and CPU at it… Stupid, stupid. Moore would have a fit.

Matias, thanks for your thoughtful feedback and willingness to talk with the Visual C++ team here at Microsoft. Open, honest feedback can improve the experience for every Visual C++ developer. The team looks forward to continuing this discussion with you offline (and appreciates your quick response to our Twitter inquiry!).

Thanks also to other commenters. In addition to commenting here, you can submit a bug, share an idea or ask a question any time on our Connect site at http://connect.microsoft.com/VisualStudio.

Eric Battalio (ebattali@microsoft.com)

PM and Community Dude

Visual C++ Team

You have a $900 CPU and $40 of RAM?

Haha, I do get those reactions from time to time.

The CPU was given to me by Intel as a prize in one of their contests. In my country (and the city I live in), getting the latest tech is difficult and expensive due to exchange rates.

An annoying bug I noticed too in VS2012: the “Allow editing of read-only files” option is broken. I have it unchecked, but I can still edit read-only files.

What’s most irritating with Visual Studio and C++ is that it doesn’t have any refactoring support. That’s totally silly!

It can’t be that difficult, since most modern IDEs have it. NetBeans can do it with C++! The development of Visual Studio is clearly more focused on C# and .NET, since refactoring support has been available for C# for a long time, but there is still nothing for C++ in new versions of VS.

If it had proper support for refactoring, then you wouldn’t have to use Go To Definition to rename methods/classes manually, like described in the post.

Go To Definition is almost useless: every time I use it, it takes seconds and freezes the whole UI, and then I think “Why did I do that?”… and I avoid it like the plague until I forget how useless it is. Also, this isn’t some low-end machine: it’s a Lenovo with an i7 and 16GB of RAM.

MS and the VS devs need to be asked more often about the missing features; maybe they will finally implement them if nagged often enough.

I’m curious what OS you’re running. I dual boot Win 7 and 8. When I installed VS2012, one of the first things I noticed that really puzzled me (and continues to) is that VS2012, when compiling a C++ project in Win7, is incredibly slow, just as you describe. But if I switch over to Win8, load up the exact same project, and rebuild, it’s DRAMATICALLY faster. Not only building: intellisense, Go To Definition, all of that stuff is quite snappy compared to the exact same thing in Win7.

I have absolutely no idea why that would be. But, for some reason, it is.

IntelliSense has always been a joke: slow and/or inaccurate. I think Microsoft should have admitted defeat on this front a long time ago (for example, around the time when you had to delete the IntelliSense DLL to be able to load projects at all, or when they bought another compiler to do the job and still failed). Instead, they now read files in 4KB chunks and in random order, like mediocre first-year students. Embarrassing. I sincerely hope they at least tried to buy someone to do it for them, and nobody wanted to sell.

In my opinion, it’s pointless to complain about this, because if they could do something about it, they would have done it by now. Instead, look into Visual Assist (which, again, I hope Microsoft tried to buy). Yes, it costs $250, and it crashes VS every now and again, and it can slow down solution loading (even more), and it’s not 100% accurate, but it improves your VS experience a lot. Or at least my VS experience. 🙂

I think the IDE is hopeless at this point. In the last 15 years, C++ developers got 2 or 3 useful features, and lost edit&continue, so not a great tally. Either the product is managed by people who are just trying to impress their bosses with bling and don’t care what the users actually need, or all the good programmers left. Most likely, it’s both. Unfortunately, nobody tries to exploit this gap in the market: the “competition” is equally clueless, and they don’t have the luxury of having started with a decent product, such as VS6, so the other “IDEs” are just hilarious.

However, I’m pretty disappointed to see that the compiler is becoming crap too. In the past, it seemed like the compiler team understood what matters in the real world: compile speed and a decent optimizer for general-purpose code (I for one don’t care much for compilers that can vectorize small loops, since I can do that myself with much better results, because I can also change the data layout). VC used to lead in these categories, so it’s sad to see they managed to fall behind there too. At least there’s hope from Clang in this direction, unlike the IDE situation, where there’s no light at the end of the tunnel.

Fun times to be a C++ programmer…

VS6 the best ever???

I want to search for text in Visual Studio 2013; how is it helpful that function names are not included?

public const string XmlItems = "Items";

I am searching on “item”, non-case-sensitive, non-whole-word. How is it helpful that “item” is only found ONE time by pressing F3 here?

Wake up.

Is that C# or C++? Because in C++ I couldn’t replicate that behaviour.

I think, really, any software developer should have a seriously beefy machine. Sitting around with 4GB of RAM and yelling at Microsoft? I mean… you make games, right? If you do this as your day job, you are probably on this damn machine 40+ hours a week…

So… how about just getting a nice superfast machine and being done with it?

“The “buy a faster PC” argument is pointless. Clang, GCC and VC 2008 will always be faster in comparison. Not to mention VS2012 uses more RAM, which automatically means it can’t scale as well as the others will (it fights Moore’s Law).”

Cutting to the chase: I’ve bumped my 4GB to 8GB. The problem remains. It’s not a problem of the amount of memory, but rather of available bandwidth, which is why my colleague with the latest Core i7 and 16GB of RAM complains as much as I do about VS’s compilation performance and the lag when he types. It does not scale.

Buying a faster computer is no longer the solution it used to be 10 years ago.

Even if we managed to get a supercomputer that compiles (e.g.) Ogre in 10 seconds, I’d choose Clang if it takes 2 seconds on that same computer. The point remains as valid as it was.

I know it’s been almost a year, but I just found this post while looking for solutions to “this f***ing dialog” regarding IntelliSense. This is on my work computer, which has 24 gigs of RAM and 16 cores, and even then compilation isn’t particularly fast and often hangs, stepping over a line in debug mode often takes a few seconds, and typing (typing!) often lags. I don’t think Matias upgrading his computer will help much at all.

Software engineers with beefy machines tend to make software that only runs well on beefy machines.

You guys are really hard to please! I have been programming using Visual Studio since 1995 (it was called Visual C++ at the time) and I’m very happy with VS2013 Update 1.

I love the latest IntelliSense: extremely useful, fast, and it almost never makes mistakes.

Compilation for x64 projects seems to be, at last, well supported.

Incremental builds are fast.

I don’t think it’s using that much memory, especially considering how memory-hungry all software seems to be these days.

The only “weaker” point I can think of is debug mode, which could have been done better but is still doing fine.

Of course, I’m running a pretty recent computer (quad-core i7 with 16GB of RAM), but it’s nowhere near expensive by any standard.

Also, you can use Visual Studio Online to build your projects in the cloud, courtesy of Microsoft.

It took me a little time to set it up properly, but it works and delivers full builds without using any local machine resources.

You must be doing toy projects. Half a million lines of code or more, and let’s see how much fun you’ll be having.

It would be “fine” if the issues were unavoidable, but they aren’t; Visual Studio is at this point a very capable but very low-quality software product. Unfortunately, you HAVE to use it in many situations, because it’s official.

I have used Visual Studio since its first incarnation as Visual C++ 1.0, back in the early 1990s. It came in a box with the cool blue infinity symbol followed by Visual C++ 1.0, and a T-shirt. I am THE CUSTOMER. But sadly, starting with VS2010 and onwards, the VS experience has deteriorated. VS2008 had EXCELLENT help. The MSDN help was FAST, and had EVERYTHING, including tons of information on non-MS development like JavaScript, HTML, Ajax, and then some. But 2010 took it away, and CRIPPLED those of us whose dev machines were not connected to the internet, or had slow internet, right out of the box. 2012 made it even worse. The HORRIBLE monochrome colors, the removal of installation projects, and SLOW, SLUGGISH debugging were what we inherited.

But now VS2013 is out, and the experience is even worse. Right off the bat, I could not get the damn thing installed. It would hang without any message. We had to call Microsoft and have them remote in, and THEY FAILED. They clung to a story that the machine was corrupted. So we rebuilt the machine just to get it installed. And then MVC debugging was LOST until Update 2 (yes, TWO) came out. We literally could not put breakpoints into MVC/cshtml files until Update 2, which we downloaded yesterday.

And now it has all crashed. I cannot build; it just hangs: no message, no timeout, no error, no nothing.

I’m sorry, but there is no excuse for this. The people at Microsoft responsible for this need to be moved off the project. My kindness and sympathy end there.

Who cares about these problems when you’ve got stuff like:

– monochrome icons so you can’t easily distinguish them (man I miss those 12 monochrome Hercules monitors)

– ALL CAPS AND FLAT DESIGN

– Kanban boards and lots of Agile stuff baby !!!

Trolling aside, I have 16GB of RAM and still bump into some of the issues described here (the IntelliSense dialog is the most annoying one). Part of the problem is that there’s still no real competition for VS. I really hope this will change in the future (Qt Creator now has a Clang back-end for code completion and it’s quite good from what I’ve seen) and put some pressure on them.

Can’t agree more. Thanks for sharing this info. This post does reflect the deterioration of Visual Studio. I hope it’s not the same with MS’s competitors.

I’ve not seen anyone mention that we’ll need to spend another few hundred dollars to get some C++11 features in VS2014!! I long for Clang on Windows.

I’ve limited myself to the technical side (i.e. the artistic design is not of my taste, but it’s much more subjective).

The price factor is relative. Although it is important, most paid features don’t change from one version to another, making Express versions a viable alternative for just compiling (furthermore you can use newer build tools with the older IDEs).

Furthermore, Microsoft has several programs (e.g. BizSpark) where, for a fixed-price subscription, you get access to the newest Premium releases without additional fees.

But I agree that paying for software that underdelivers is utterly depressing.

Nice article, so true. As one of the few *previous* proponents of VS in my company, I now dread writing code on Windows/VS. I also usually need to have at least two instances of VS 2013 running, and if I had to count all the seconds lost in productivity (including time spent writing this post), the 20 seconds here to simply alt-tab/ctrl-tab, 30 seconds there for the IDE to become responsive (which happens ever so often), minutes and minutes waiting for compilation, must add up to hours and hours of my time. Also, the fact that 1) highlighting a method/class, 2) hitting “Ctrl-shift-F” 3) waiting for the search of all headers and sources 4) moving the mouse over the find window 5) clicking on the desired line – is often faster than “go to definition” is another gripe. And let’s not forget Visual Assist which does everything 10 times faster than VS (or at least it did in VS2010) and has/(d) way more features even though it is a 3rd party plugin….On the plus side, I am glad that the new compiler(s) support(s) new c++11 features, but all the points you made make VS far from usable, and the developer far from productive.

NetBeans + Cygwin works for me, though I hear compilation times are slightly better in Eclipse. As for Visual Studio, it’s a joke, though I have been known to hold my own opinions. Think of it another way: doing C++ inside a Java-based IDE also gives you access to pretty much everything for Java and Scala. Can’t go wrong there.

I don’t think this test is enough to condemn the new Visual Studio. I can accept a 5-minute compile time if the result is what I need. Did you check the memory and CPU load of the resulting binary? Did you compare its speed? No! I need an optimized result, not a fast compiler.

As someone who also works in Xcode, let me tell ya, you don’t know how good you have it! Compile and go no longer really works, on-the-fly isn’t there, compiling is fast but exe packaging is *glacial*, and there are all sorts of minor things from VS I miss in Xcode.

That said, is there a reason VS2013 has to take up over 7 GB on my machine? I’m on a 128 GB SSD, and it hurts. The VS2010 install on the same machine is 500 MB. Is this normal? Any suggestions for slimming it down?

I’m actually about to fu….cking kill myself because of Microsoft.. THE SHITTIEST CHOICE I MADE IN MY LIFE WAS TO GO WITH MICROSOFT, VISUAL STUDIO, DOTNET, C#, AND ALL THEIR STUPID UI SDKs..

In concept they were right.. in practice.. THEY SHAT ON EVERY LOYAL CODER THEY HAD…

LOL!!

Lazy programmers complaining about lazy programmers: so the “buy more RAM/CPU” solution doesn’t work anymore? Where are your brains? If your program can’t run on a slow machine, it is inefficient and you are brainless dolls.

I pretty much gave up on VS for editing code. I mainly use it for project management and debugging. Editing code is so much better in Eclipse with CDT. Despite Eclipse being a bloated piece of software in its own right and written in Java, the CDT C++ editor does everything VS does, only faster. Code completion, analysis and navigation are at least on par, and in many cases better. Eclipse’s Outline view with incremental and wildcard searches is miles ahead of Visual Studio’s “Class View”, and because of its almost limitless flexibility in project management, Eclipse works just fine with MS compilers and build tools.

Also, Eclipse has better Git support and excellent support for other version control systems, which VS totally lacks. The downside: Eclipse can be a very tricky beast, especially for new users. Its flexibility is also its biggest weakness, because it can be *really* hard and frustrating for new users to set everything up. Once you’re “in”, though, it’s a great environment.

Another possible alternative on the horizon is JetBrains’ CLion. It’s still at an early stage, but already very promising. Its code analysis and its understanding of code context are already better than the competition’s. Java people who have been using IntelliJ IDEA as their IDE know how smart an editor can be.

I am still working with VS6, and I will never change.

VS6 is perfect, and will stay the best for many years.

Please make it free source, like VB6.

Have you considered trying tools like IncrediBuild?

IncrediBuild’s new predictive mechanism greatly optimizes the throughput of Visual Studio’s MSBuild for both Android- and Windows-targeted C++ builds. This means that cross-platform builds executed with IncrediBuild make maximum use of the cores on a single machine, across multiple machines, or even remotely. IncrediBuild’s higher throughput increases the scalability of distributed execution, especially when processing multiple projects with inter-dependencies.

I’ve been using VS2008 for years, and I also had an install of 2010. I never really bothered using 2010, because it was generally a lot slower for coding. Input latency was a big deal; part of that is because VS2010 compiles stuff in the background to show you errors. But recently I upgraded to VS2013. Besides the absolutely god-awful GUI (WTF were these idiots thinking?), the first thing that really stood out was the slow compilation times. At a guess, it felt 1-3x slower for medium-sized projects.

That was my experience as well: Clang (Xcode 5) builds my projects 3-4x faster than MSVC 2013 (running on a recent Lenovo with an SSD). Hopefully MS will catch up, as they always did, but meanwhile I’m working on my Mac. 🙂

I was only installing Visual Basic 6.0 on Windows 8.1 and decided to install Visual Studio 12 as some blog recommended. VB6 installed fine, and 4 hours later Visual Studio had finally downloaded and installed without a hitch. I tried to run it a few times, and each time, minutes later, I figured it was just like my free Web Developer 2010, which is so horrendously slow at typing anything that I have to paste my edits in.

And uninstalling VS13 took another hour. These MS people have been nuts since Bill left.

I pity you smart C guys, and I will stick with VB6 forever!!!

I just upgraded to 2013 from 2012. I know VS 2015 is nearly out, but since I don’t intend to move to Windows 10, I will be stuck with VS2013 for a while.

The thing I noticed immediately is that clicking on a class name in the Object Browser often doesn’t take you to the .h file anymore. You have to click on a couple of other classes, then go back to the class you want, and with luck it will now open the .h file. Clearly a bug, and clearly one that has been there for a while, but nobody at MS seems to care.

I’m using Visual Assist, and without it I would be lost. It’s the only thing that makes the VS IDE usable.

For me, the only 2 reasons I use it are:

1) Many libraries come in versions for VS only (e.g. the Unreal SDK, unless they have made progress with GCC by now).

2) The debugger is arguably the best in the industry. GNU’s gdb is stone age compared with the VS debugger.

If these things were fixed, I would defect immediately. I have my eye on SlickEdit; although it is not as good as it should be, I used it some years ago and it was quite good. I would love to use GCC, but the obstacles you have to overcome to make it work at even half the comfort level of VS are just too great.

Personally, I found VS 2013 to be a HUGE step backwards in performance compared to 2010. Even a simple “Ctrl+Shift+F” can take several seconds before it even shows the dialog, let alone runs the search itself.

Not to mention “shutting down” Visual Studio. Horrible.

It’s almost hilarious how slow the compilation time is compared to my Delphi projects, but that’s more the fault of the language than the compiler, I guess.

Thx for this comparisons.

I have to work daily with the Microsoft C++ toolchain and it’s a real pain in the ass. We compile our code with Clang on OS X, and development is far smoother and faster with that compiler.

Did you get the chance to time the latest msvc compiler ?

Cheers

Alex

Unfortunately no. I’ve been wanting to test VS2015 for a while now, but I’ve been too busy with a lot of other stuff to set it up and do proper testing.

Kind of late to the ‘party’ here but fantastic comments everyone. This thing is nice in some aspects but a royal PITA in others. It’s just too big, I miss the days when you could download just the C++ package if you wanted, instead of these broken, multi-gigabyte monstrosities.

Here I am, sitting here waiting for executable packaging that now takes two minutes instead of two seconds, after REMOVING code from one small class in a tiny project….

In a way it’s good there is so much bad software out there, there are many opportunities.

Alright, I’m currently struggling with Microsoft Visual Studio 2017 Community which supposedly supports Windows 7, however:

1. Microsoft Visual Studio tends to be sluggish.

2. Has a tendency to break and stop working for no obvious reason.

3. Crashes at random.

4. Has a tendency to throw unhelpful error messages at any point (“Unknown error”, “Access denied”, “blah blah whatever stopped working…”) that usually fail completely to give any further information, leaving the user with an “OK” button to click and at a complete loss as to what is going on.

5. Now I will admit, the following is more a matter of personal preference, but still:

I’m a casual VB.NET programmer with a passing interest in F#, C#, and C++, and I usually don’t bother with code analysis or programming in teams. Surely I can’t be the only one, but there’s no option that lets you skip installing these features. They’re simply force-fed to the user. Grrr… I’m no expert, but I get the feeling that Microsoft has tried to cram waaay too many features into one package here, and it’s showing.

Once you finally get it to stop crashing and being unusably slow, and have configured several dozen options scattered everywhere, it’s an okay piece of software, and VB.NET is an okay programming language.

Code::Blocks + Mingw-W64 works wonders!

C++ might be platform-agnostic, but that doesn’t mean M$ has the best implementation. Also, .NET users have to write extra lines of code that don’t exist in pseudo-generic C++; you’re practically adding more boilerplate to .NET apps. But hey, if you can live with that, I guess you get pretty GUI frameworks that add absolutely nothing to the language reference.

2017 is even worse

I do agree